Every Monday, L&D teams open their LMS dashboard hoping for clarity.

Instead, they find charts. Completion percentages. Login counts. Heatmaps showing activity across departments.

All data. No answers.

The questions remain the same:

- What should we fix first?

- Why is engagement dropping in Sales but rising in Support?

- Which content should we retire, and which deserves promotion?

Most learning platforms mistake measurement for understanding. They show you what happened without explaining why it matters or what changed.

This isn’t a reporting problem. It’s an intelligence problem.

The Insight Gap

Traditional LMS analytics operate on a simple assumption: if we show you enough data, you’ll figure out what to do.

But L&D teams aren’t data scientists. They’re managing onboarding, compliance, skill development, and culture programs, often simultaneously. They don’t have time to build pivot tables or compare week-over-week trends manually.

What they need isn’t more dashboards. It’s automated intelligence that surfaces meaning before they have to ask.

This is where truly AI-native platforms diverge from AI-branded ones.

AI-Native vs. AI-Layered: The Real Difference

Many LMS platforms add a chatbot or basic content recommendations and call themselves “AI-powered.” That’s AI layered on top of a traditional system.

An AI-native LMS is different by design:

- Intelligence isn’t a feature; it’s the foundation

- The system continuously observes learning behavior across your entire organization

- Patterns emerge automatically, without manual configuration

- Insights arrive before you realize you need them

But here’s what most vendors get wrong: they jump straight to automation (sending notifications, triggering workflows, making recommendations) before building real understanding.

Beetsol deliberately starts with pure insight. No premature actions. No rules you didn’t ask for. Just intelligent observation that helps you see what’s actually happening.

Why? Because automation without understanding creates noise, not value.

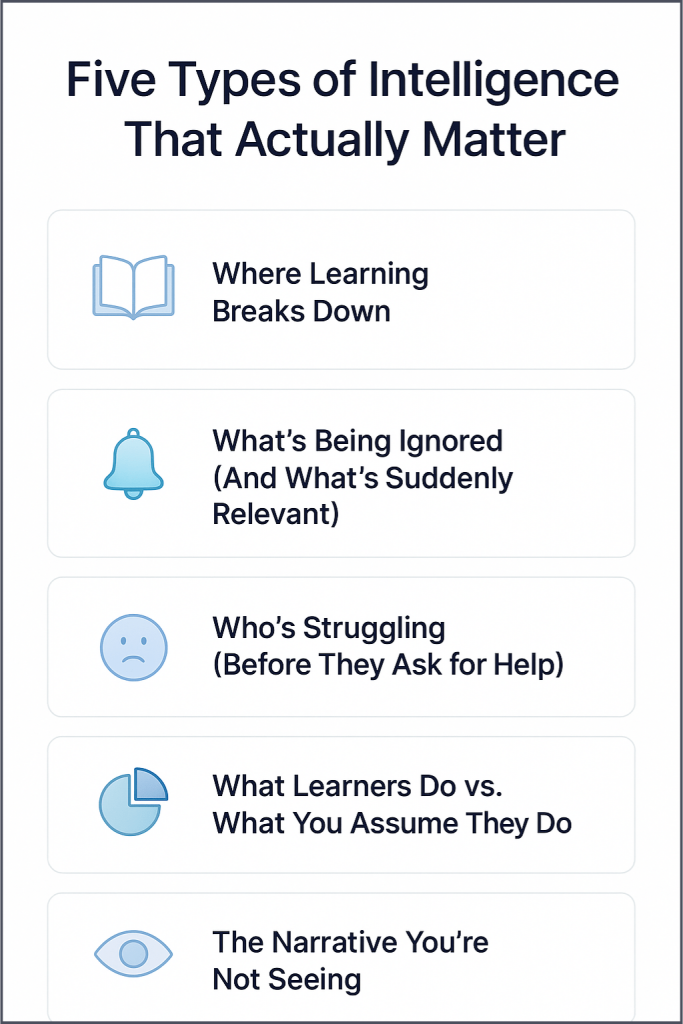

Five Types of Intelligence That Actually Matter

1. Where Learning Breaks Down

What the system detects:

- Exact timestamps where learners abandon modules

- Sections that trigger repeated rewatching

- Content that takes 3-4x longer than expected to complete

Why it matters: When 60% of learners drop off at the 12-minute mark in your product training, you don’t need to guess. You know exactly where the content fails.

Instead of rebuilding entire courses, you fix the specific moment where clarity breaks down.

Real example: A team discovered a single confusing slide was causing a 42% drop-off. Fixing that one slide reduced repeat support questions by 22% the following month.
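Here is one way a drop-off point like “the 12-minute mark” could be located. This is a minimal sketch, not Beetsol’s implementation. It assumes playback data is available as one hypothetical `ViewSession` record per learner, holding the furthest position reached and a completion flag. It buckets abandonment points into one-minute bins and reports the bin where the largest share of all learners stopped. `ViewSession` and `find_dropoff_hotspot` are names invented for this example.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class ViewSession:
    """Hypothetical record: how far one learner got in a video module."""
    learner_id: str
    stop_seconds: float  # furthest playback position reached, in seconds
    completed: bool


def find_dropoff_hotspot(sessions, bin_seconds=60):
    """Return (bin_start_seconds, share_of_all_learners) for the time bin
    where the most learners abandoned the module, or None if nobody did."""
    if not sessions:
        return None
    abandoned = [s for s in sessions if not s.completed]
    if not abandoned:
        return None
    # Group abandonment positions into fixed-width bins (one minute by default).
    bins = Counter(int(s.stop_seconds // bin_seconds) for s in abandoned)
    bin_index, count = bins.most_common(1)[0]
    return bin_index * bin_seconds, count / len(sessions)


sessions = [
    ViewSession("a", 725, False),
    ViewSession("b", 740, False),
    ViewSession("c", 1500, True),
    ViewSession("d", 300, False),
    ViewSession("e", 1500, True),
]
print(find_dropoff_hotspot(sessions))  # (720, 0.4): 40% of learners left in minute 12
```

Binning by minute rather than by exact second keeps a single learner in a small cohort from producing a misleading “hotspot.”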

2. What’s Being Ignored (And What’s Suddenly Relevant)

What the system detects:

- Content with zero engagement over 60 days

- Modules seeing sudden spikes in completions

- Skills or topics gaining momentum without promotion

Why it matters: A lean LMS is an effective LMS. When you can see that 12 modules have under 5% views in 30 days, you can confidently archive or refresh them.

Conversely, when a compliance module sees a 63% jump in completions, that’s a signal: maybe a policy changed, or a new regulation is driving urgency.

The insight isn’t just “what’s popular.” It’s “what’s changing, and why now?”
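Here is a rough sketch of how both signals could be flagged, assuming activity is counted as distinct learners per module over two equal-length windows (for example, two consecutive 30-day periods). The 5% stale threshold and the 1.5 spike ratio (a 50% increase) are illustrative choices, not Beetsol settings. `flag_module_changes` is a hypothetical name.

```python
def flag_module_changes(previous, current, active_learners,
                        stale_share=0.05, spike_ratio=1.5):
    """Return (stale, spiking) module names, each sorted alphabetically.

    previous, current: dicts mapping module name -> number of distinct
    learners active in that module during two consecutive, equal windows.
    A module missing from `current` is treated as having zero activity.
    """
    if active_learners <= 0:
        raise ValueError("active_learners must be positive")
    stale, spiking = [], []
    for module in set(previous) | set(current):
        before = previous.get(module, 0)
        now = current.get(module, 0)
        if now / active_learners < stale_share:
            # Reached under 5% of active learners: candidate to archive or refresh.
            stale.append(module)
        elif before > 0 and now / before >= spike_ratio:
            # Activity grew by at least 50%: worth asking what changed.
            spiking.append(module)
    return sorted(stale), sorted(spiking)


previous = {"Compliance 2024": 100, "Legacy onboarding": 4, "API training": 50}
current = {"Compliance 2024": 163, "Legacy onboarding": 3, "API training": 55}
print(flag_module_changes(previous, current, active_learners=300))
# (['Legacy onboarding'], ['Compliance 2024'])
```

Comparing two windows of the same length keeps the spike test from mistaking a longer window for growth.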

3. Who’s Struggling (Before They Ask for Help)

What the system detects:

- Learners who started strong but went silent for weeks

- Cohorts performing below organizational average

- Repeated assessment failures on specific questions

Why it matters: Some teams need different support. If your Sales team shows 18% engagement while the company average is 37%, that’s likely not a motivation problem; it’s a contextual one.

Maybe the timing is wrong. Maybe the content isn’t relevant to their workflow. Maybe they’re drowning in quota pressure.

Early detection means early intervention, not post-mortem analysis during quarterly reviews.
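Comparing a cohort against the organizational average, as in the Sales example, is simple arithmetic once engagement counts exist. The sketch below assumes hypothetical per-cohort counts of active and enrolled learners. It flags any cohort whose rate falls below a margin of the organization-wide rate. The 0.75 margin and the name `cohorts_below_average` are illustrative assumptions.

```python
def cohorts_below_average(engaged, headcount, margin=0.75):
    """Return (cohort, rate) pairs whose engagement rate is below
    `margin` times the organization-wide rate, lowest rate first.

    engaged: cohort -> number of learners active in the period
    headcount: cohort -> number of learners enrolled
    """
    total_people = sum(headcount.values())
    if total_people == 0:
        return []
    # Pooled average: every learner counts once, so larger teams weigh more.
    org_rate = sum(engaged.get(c, 0) for c in headcount) / total_people
    flagged = []
    for cohort, people in headcount.items():
        if people == 0:
            continue
        rate = engaged.get(cohort, 0) / people
        if rate < margin * org_rate:
            flagged.append((cohort, rate))
    return sorted(flagged, key=lambda item: item[1])


engaged = {"Sales": 18, "Support": 56}
headcount = {"Sales": 100, "Support": 100}
print(cohorts_below_average(engaged, headcount))  # [('Sales', 0.18)]; org rate is 0.37
```

The margin keeps teams sitting only slightly below average from triggering alerts. A pooled rate, rather than a mean of team rates, keeps one small team from skewing the baseline.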

4. What Learners Do vs. What You Assume They Do

What the system detects:

- Modules marked “complete” but only 40% actually viewed

- Sections replayed multiple times (comprehension signals)

- Content accessed but never finished

Why it matters: Completion rates lie. A learner who clicks through slides to reach “complete” looks the same as one who genuinely engaged.

But when the system tracks behavior such as rewatching, backtracking, and time spent, it reveals the truth: where understanding actually happened, and where it didn’t.

This separates real learning from checkbox compliance.
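You can measure the gap between “complete” and “actually viewed” by merging the playback ranges a learner watched and comparing the result with the module’s length. This sketch assumes playback is logged as (start, end) second ranges. That is an assumption about the data, not a description of how Beetsol records it. `viewed_fraction`, `flag_click_through`, and the 50% cutoff are hypothetical.

```python
def viewed_fraction(intervals, duration):
    """Fraction of a module actually played. Overlapping ranges are merged,
    so rewatched sections count only once toward coverage."""
    if duration <= 0:
        raise ValueError("duration must be positive")
    covered = 0.0
    run_start = run_end = None
    for start, end in sorted(intervals):
        # Clip each range to the module's bounds and skip empty ones.
        start, end = max(0.0, start), min(float(duration), end)
        if end <= start:
            continue
        if run_end is None or start > run_end:
            # Gap before this range: close the previous merged run.
            if run_end is not None:
                covered += run_end - run_start
            run_start, run_end = start, end
        else:
            # Overlaps or touches the current run: extend it.
            run_end = max(run_end, end)
    if run_end is not None:
        covered += run_end - run_start
    return covered / duration


def flag_click_through(records, min_viewed=0.5):
    """Return learner IDs marked complete who played less than min_viewed."""
    return [learner for learner, completed, intervals, duration in records
            if completed and viewed_fraction(intervals, duration) < min_viewed]


records = [
    ("ana", True, [(0, 400)], 1000),              # "complete", 40% viewed
    ("ben", True, [(0, 600), (500, 1000)], 1000),  # complete, fully viewed
    ("cy", False, [(0, 100)], 1000),               # not complete
]
print(flag_click_through(records))  # ['ana']
```

Overlap that the merge discards is exactly the rewatching mentioned above, so the same interval data could also feed a replay signal.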

5. The Narrative You’re Not Seeing

What the system generates:

- Weekly summaries highlighting the three most meaningful changes

- Plain-language explanations of what’s evolving and why

- Pattern recognition across seemingly unrelated signals

Why it matters: Raw data is powerful. Narrative makes it usable.

Instead of logging into a dashboard and wondering “what should I look at today?”, you receive a synthesis:

“Engagement in the Product team increased 18% this week, driven primarily by the new API training module launched Monday. Meanwhile, the Leadership cohort shows declining activity for the third consecutive week, likely tied to Q4 planning cycles.”

This is intelligence, not reporting. The system tells you what changed, why it likely matters, and where your attention should go.
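A weekly summary could start by ranking week-over-week percentage changes and keeping the top three. The sketch below covers only that ranking step and a template sentence. It does not attempt the “why” that a narrative layer adds. Treating the largest percentage swing as the most meaningful change is a simplifying assumption. `top_changes`, `summarize`, `min_base`, and the metric names are hypothetical.

```python
def top_changes(last_week, this_week, k=3, min_base=10):
    """Return up to k (metric, pct_change) pairs, largest absolute change first.

    Metrics with a baseline below min_base are skipped, because percentage
    changes on tiny numbers are mostly noise.
    """
    changes = []
    for name, now in this_week.items():
        before = last_week.get(name, 0)
        if before < min_base:
            continue
        pct = (now - before) * 100 / before
        changes.append((name, pct))
    changes.sort(key=lambda item: abs(item[1]), reverse=True)
    return changes[:k]


def summarize(changes):
    """Turn ranked changes into plain-language lines."""
    lines = []
    for name, pct in changes:
        direction = "up" if pct >= 0 else "down"
        lines.append(f"{name}: {direction} {abs(pct):.0f}% week over week")
    return lines


last_week = {"Product team engagement": 100, "Leadership engagement": 50,
             "Sales engagement": 80, "Support engagement": 60, "New team": 2}
this_week = {"Product team engagement": 118, "Leadership engagement": 40,
             "Sales engagement": 82, "Support engagement": 63, "New team": 20}
for line in summarize(top_changes(last_week, this_week)):
    print(line)
```

Here “New team” is left out despite a tenfold jump, because two learners is too small a baseline to report as a trend.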

What Learners Experience: Nudges, Not Analytics

Here’s a critical principle: learners should never see dashboards or reports.

They should experience timely, contextual nudges that improve momentum without adding cognitive load.

Beetsol’s learner-facing insights are designed to feel helpful, never controlling:

- Progress nudges: “You’re 8 minutes away from finishing this module”

- Inactivity reminders: “You started this last Tuesday. Want to pick up where you left off?”

- Micro-celebrations: Small wins acknowledged at key milestones

- Contextual encouragement: “Most people finish this section in about 10 minutes”

- Time-budget clarity: “These three modules take less than 15 minutes total”

The goal isn’t to gamify learning. It’s to reduce friction and normalize effort at moments when learners might otherwise give up.

Why “Insights Before Actions” Is the Smartest Strategy

Most AI-powered tools rush to automate: send this notification, recommend this course, trigger this workflow.

Beetsol takes a different approach: observe first, act later.

Why?

- Trust is built through accuracy, not speed: When insights are consistently correct, teams trust the system. When automation fires prematurely, it creates skepticism.

- Understanding compounds over time: Every week, the system learns more about your organization’s patterns. That intelligence makes future recommendations far more precise.

- Admins stay in control: Instead of feeling overridden by automation, L&D teams gain clarity and confidence in their decision-making.

This isn’t a limitation; it’s a design philosophy. Beetsol prioritizes what the system knows with confidence before ever suggesting what to do next.

And when the platform is ready to recommend actions? It will do so with precision earned through continuous observation, not guesswork.

What This Looks Like in Practice

Let’s say you’re managing learning for a 300-person company. Here’s what a Monday morning might look like with an AI-native LMS:

9:00 AM – You open Beetsol

Instead of blank dashboards, you see:

Weekly Insight Summary

- Rising: Communication skills content up 44% this week

- Stalling: Customer Success onboarding, where 3 new hires haven’t progressed past module 2 in 6 days

- Invisible gap detected: Your “Handling Objections” training has a 58% drop-off at the 14-minute mark

- Suggested focus: Check module 2 blockers in CS onboarding, and consider revising the middle section of the Objections training

9:05 AM – You investigate the CS onboarding stall

The system shows you exactly where those three learners stopped. All three paused at the same step: “Setting Up Your CRM Workspace.”

You reach out. It turns out the new hires hadn’t been given CRM access yet: an IT bottleneck, not a content issue.

9:15 AM – You review the “Handling Objections” drop-off

The system flags the exact timestamp: 14:03. You watch that section. There’s a dense slide with seven bullet points and no examples.

You replace it with a 90-second scenario video. The following week, drop-off at that point falls to 12%.

This is what insight-driven learning looks like. Problems surface automatically. Solutions become obvious. Guesswork disappears.

The Difference Between Watching and Understanding

Here’s the core truth about AI in learning platforms:

Every LMS can track what learners click. Very few can explain what it means.

Beetsol doesn’t just log behavior. It interprets patterns, detects anomalies, and surfaces what actually matters, all without requiring you to become a data analyst.

This is what separates systems built for scale from systems built for understanding.

And in a world where L&D teams are leaner, busier, and expected to prove ROI with fewer resources, understanding is the competitive advantage.

What Happens Next

The future of enterprise learning isn’t louder dashboards or more notifications.

It’s quiet intelligence: systems that observe, understand, and explain before they act.

Beetsol’s AI-native LMS shows that real innovation doesn’t start with automation. It starts with insight.

And when the time comes to take action? The system will recommend with precision, not guesswork.

Because it spent the time understanding first.

Want to see how insight-driven learning works with your team’s content?

Explore a pilot with Beetsol →